Phase interactions are well known for their ability to destructively interfere with recorded signals, but an understanding of the process can turn it into one of the most powerful creative tools available to you.

There's more to decent mic technique than knowing how different mics work and what directions musical instruments sound good from, because the moment you use two or more mics simultaneously, you'll find that their recorded signals don't simply add together — they can also subtract and interact in complex and sometimes counter-intuitive ways. To understand why this is, you need to grasp the concept of phase and how it applies to different miking setups — which, conveniently, is what this article is all about!

Simple Sine Waves

Let's start with a sine wave: the simplest audio waveform there is. Every other audio waveform can theoretically be broken down into a collection of sine waves at different frequencies, so by dealing with the concept of phase in terms of sine waves first, we can extrapolate to how phase affects the more complicated real-world audio signals you'll find coming out of the back end of a mic.

A sine wave generates only a single audio frequency, according to how many times its waveform shape repeats in a second. For example, a 1kHz sine wave repeats its waveform 1000 times per second, with each waveform repetition lasting 1ms. Imagine that you have two mixer channels, each fed from the same sine-wave source at the same frequency. The peaks and troughs of the two waveforms will be exactly in line, and mixing them together will simply produce the same sine wave, only louder. In this situation we talk about the two sine waves being 'in phase' with each other.

If you gradually delay the audio going through the second channel, however, the peaks and troughs of the two sine waves shift out of alignment. Because of the unique properties of sine waves, the combination of the two channels will now still produce a sine wave of the same frequency, but its level will be lower than if the two channels were in phase, and we say that partial phase cancellation has occurred. When the second channel is delayed such that its peaks coincide exactly with the first channel's troughs (and vice versa), the two waveforms will combine to produce silence. At this point we say that the waveforms are completely 'out of phase' with each other and that total phase cancellation has occurred.

When total phase cancellation occurs, you sometimes hear engineers say that the signals are '180 degrees out of phase'. This is a phrase that's not always used correctly, and it can therefore be a bit confusing. In order to describe the phase relationship between two identical waveforms, regardless of their frequency (that is, how fast they're repeating), mathematicians often quantify the offset between them in degrees, where 360 degrees equals the duration of each waveform repetition. Therefore, a zero-degree phase relationship between two sine waves makes them perfectly in phase, giving the highest combined signal level, whereas a 180-degree phase relationship puts them perfectly out of phase, resulting in total phase cancellation — and therefore silence as the combined output. All the other possible phase relationships put the waveforms partially out of phase with each other, resulting in partial phase cancellation.
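
The degree figures translate directly into levels. For two equal-level sine waves offset by φ degrees, the sum is another sine wave whose peak level is 2|cos(φ/2)| times that of either input, and a delay τ at frequency f produces an offset of 360·f·τ degrees. This sketch (the function names are our own, purely illustrative) evaluates both. Note that a 120-degree offset gives a sum at the same level as each input, the situation described for Graph B2.

```python
import math

def phase_offset_degrees(freq_hz: float, delay_s: float) -> float:
    """Phase offset (0-360 degrees) produced by delaying a sine wave of freq_hz by delay_s seconds."""
    return (360.0 * freq_hz * delay_s) % 360.0

def combined_level(phase_deg: float) -> float:
    """Peak level of the sum of two unit-level sine waves offset by phase_deg degrees.

    Uses the identity sin(x) + sin(x + p) = 2 * cos(p / 2) * sin(x + p / 2).
    """
    return abs(2.0 * math.cos(math.radians(phase_deg) / 2.0))

for deg in (0, 60, 120, 180):
    print(f"{deg:3d} degrees -> combined level {combined_level(deg):.3f}")

# A 0.25ms delay is a quarter of a 1kHz cycle, i.e. a 90-degree offset.
print(phase_offset_degrees(1000, 0.00025))
```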

What's confusing about the '180 degrees out of phase' term is that it is sometimes used to refer to a situation where the second channel has not been delayed, but has had its waveform flipped upside down, so that the peaks become troughs and vice versa — a process more unambiguously referred to as polarity reversal. This scenario also results in silence at the combined output, hence the common confusion in terminology, but it's very important to realise that the total phase cancellation here is brought about by inverting one of the waveforms, not by delaying it. In this example, it might seem like we're splitting hairs, but in practice the distinction between time delays and polarity becomes much more important.

Comb Filtering In The Digital Studio

It's easy to attune your ear to the effects of simple comb filtering by putting the same audio onto two tracks in your sequencer and inserting a plug-in delay on one of the channels. It's very useful to familiarise yourself with the kinds of sounds this produces, because the sound of comb filtering is often the first indicator of a variety of undesirable delay and routing problems in digital systems.
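
In sample terms, the experiment above is a feed-forward comb filter: each output sample is the input plus a delayed copy of it. Here is a minimal sketch on plain Python lists (the function name and test signals are our own), with the delay given in whole samples.

```python
import math

def mix_with_delayed_copy(samples, delay_samples, gain=1.0):
    """Return x[n] + gain * x[n - delay_samples], treating samples before the start as silence."""
    out = []
    for n, x in enumerate(samples):
        delayed = samples[n - delay_samples] if n >= delay_samples else 0.0
        out.append(x + gain * delayed)
    return out

SAMPLE_RATE = 48000

# An impulse shows the 'direct sound plus echo' structure of the filter.
print(mix_with_delayed_copy([1.0] + [0.0] * 7, delay_samples=3))

# 24 samples at 48kHz is 0.5ms, so a 1kHz sine cancels once both copies are present.
sine_1k = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(480)]
residual = mix_with_delayed_copy(sine_1k, delay_samples=24)[24:]
print("largest residual after the first 24 samples:", max(abs(v) for v in residual))
```

At a sample rate fs, a delay of D samples puts the first notch at fs / (2D), which is why 24 samples at 48kHz targets 1kHz.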

For example, if you're using some kind of 'zero-latency' direct monitoring on your audio interface, you'll hear anything you're recording direct without any appreciable delay at all. If you forget to switch off software monitoring within your sequencer while you're doing this, though, you'll also hear another version of what you're recording, delayed according to the latency of your soundcard's audio drivers — and this will phase-cancel with the direct version.

Another phase-related problem I've seen is where people are recording to and monitoring from their computer via a hardware mixer, and mistakenly route the computer's output signal back to its input for recording, alongside whatever else they want to record. This creates a feedback loop, where the audio is delayed by the soundcard's latency every time it goes around the loop. If the loop as a whole increases the gain of the signal going around it, you'll quickly know about it because a shrieking feedback 'howlround' will develop, but if the feedback loop decreases the gain, you'll get nasty-sounding comb filtering instead, which should alert you that something is amiss.
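
The accidental loop behaves like a feedback comb filter: on each trip round, the output is fed back in, delayed by the latency and scaled by the loop gain. This sketch reduces the interface and mixer to a fixed delay in samples and a single loop-gain figure (a hypothetical simplification of real hardware) to show why a gain below 1 gives decaying repeats, while a gain above 1 gives repeats that keep growing.

```python
def feedback_loop(samples, delay_samples, loop_gain):
    """y[n] = x[n] + loop_gain * y[n - delay_samples]: a simple feedback comb filter."""
    if delay_samples < 1:
        raise ValueError("a feedback loop needs at least one sample of delay")
    out = []
    for n, x in enumerate(samples):
        fed_back = out[n - delay_samples] if n >= delay_samples else 0.0
        out.append(x + loop_gain * fed_back)
    return out

impulse = [1.0] + [0.0] * 9
print(feedback_loop(impulse, delay_samples=3, loop_gain=0.5))  # repeats die away: comb filtering
print(feedback_loop(impulse, delay_samples=3, loop_gain=1.2))  # repeats grow: heading for howlround
```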

If your sequencer doesn't implement plug-in delay compensation, that's another potentially rich source of phase-cancellation problems. In this case, if you set up a send effect and forget to set its mix control to 100 percent wet, the unprocessed portion of its output will return to the mix delayed by the plug-in's processing latency, and will phase-cancel with the original signal in the source channel. Most send effects incorporate some element of delay anyway, so the additional processing delay is rarely a problem if the send effect's mix control is set to 100 percent wet: think of it as a bit of bonus pre-delay chucked in for free! However, if you use non-delay-based processes such as compression or distortion as send effects, then phase cancellation becomes a real problem without plug-in delay compensation, irrespective of the setting of any mix control.

Even if your recording software compensates for plug-in delays, you'll still need to be on your guard, because some plug-ins don't properly declare their processing latency to the host application and they therefore defeat the sequencer's attempts to compensate for it — so please make sure you use your ears! Some external processing cards can also cause problems with software plug-in delay compensation systems, and if you send out to any external hardware effects using spare connections on your audio interface this will also introduce delays through the audio interface (and perhaps through the external unit itself). Some sequencers include facilities to measure the delay and compensate for it (such as Cubase's External FX facility), some just to measure it, and others lack this facility. In short, it pays to learn what phase cancellation sounds like so that you can avoid it tampering with the sounds of your mixes.

Moving Into The Real World

Let's scale things back up to deal with real-world sounds, made up as they are of heaps of different sine waves at different frequencies, each one fading in and out as the pitch and timbre change. If we feed a drum loop to our two mixer channels, instead of a single sine wave, any delay in the second channel will have a dramatic effect on the tonality of the combined signal, rather than just altering its level. The reason for this is that, for a given delay, the phase relationships between sine waves on the first channel and those on the second channel depend on the frequency of each individual sine wave. So, for example, a 0.5ms delay in the second channel will put any 1kHz sine-wave components (the waveforms of which repeat every 1ms) 180 degrees out of phase with those on the first channel, resulting in total phase cancellation. On the other hand, any 2kHz sine-wave components (the waveforms of which repeat every 0.5ms) will remain perfectly in phase. As the frequency of the sine-wave components increases from 1kHz to 2kHz, the total phase cancellation becomes only partial, and the level increases towards the perfect phase alignment at 2kHz.

Of course, above 2kHz the sine-wave components begin partially phase-cancelling again, and if you're quick with your mental arithmetic you'll have spotted that total phase cancellation will also occur at 3kHz, 5kHz, 7kHz, and so on up the frequency spectrum, while at 4kHz, 6kHz, 8kHz, and so on, the sine-wave components will be exactly in phase. This produces a characteristic series of regularly spaced peaks and troughs in the combined frequency response of our drum loop, an effect called comb filtering (see the 'Comb Filtering In The Digital Studio' box).
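
The arithmetic generalises: for a delay τ, total cancellation falls at odd multiples of 1/(2τ) and perfect reinforcement at whole multiples of 1/τ. This sketch (the function name is our own) lists both and reproduces the 0.5ms example.

```python
def comb_notches_and_peaks(delay_s, max_hz=20000.0):
    """Frequencies (Hz) of total cancellation and of perfect reinforcement (0Hz excluded)
    for a signal mixed at equal level with a copy of itself delayed by delay_s seconds."""
    first_notch = 1.0 / (2.0 * delay_s)
    notches, peaks = [], []
    k = 1
    while k * first_notch <= max_hz:
        # Odd multiples of the first notch cancel; even multiples (multiples of 1/delay) reinforce.
        if k % 2:
            notches.append(k * first_notch)
        else:
            peaks.append(k * first_notch)
        k += 1
    return notches, peaks

notches, peaks = comb_notches_and_peaks(0.0005, max_hz=8500)
print("notches (Hz):", [round(f) for f in notches])
print("peaks (Hz):  ", [round(f) for f in peaks])
```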

A delay of just 0.000025s (a 40th of a millisecond) between the two channels will cause total phase cancellation at 20kHz, but you'll also hear partial phase cancellation at frequencies below this. As the delay increases, the comb-filter response marches further down the frequency spectrum, trailing its pattern of peaks and troughs behind it, which themselves get closer and closer together. However, when the delay times reach beyond about 25ms or so (depending on the sound in question), our ears start to discern the higher frequencies of the delayed signal as distinct echoes, rather than as a timbral change, and as the delay time increases phase cancellation is restricted to progressively lower frequencies.

Phase Considerations For Single-mic Recordings

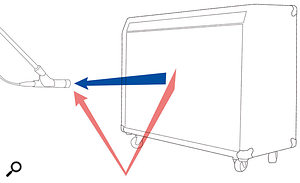

Given that sound takes roughly a millisecond to travel a foot, it's easy to see how recording the same instrument with more than one mic can quickly lead to phase-cancellation problems if the mics are at different distances from the sound source. However, even if all we ever did in the studio was record with a single mic, phase cancellation would still affect our recording because of the way sound reflects from solid surfaces such as walls. For example, if you close-mic an electric guitar cabinet, a significant minority of the sound picked up will actually be reflections from the floor. If the distance from the cabinet's speaker cone is only six inches, and the floor is a foot below the mic, the direct and reflected sounds of the cone will meet at the mic capsule with around 1.5ms delay between them. In theory this will give a comb-filtering effect with total phase cancellation at around 300Hz, 900Hz, 1.5kHz, 2.1kHz, and so on.
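
The 'image source' idea gives a quick way to estimate that delay: a floor reflection behaves as if it came from a mirror-image speaker the same distance below the floor. This sketch assumes the cone and the mic capsule sit at the same height, a foot above the floor and six inches apart, and uses 343m/s for the speed of sound (all our assumptions). On those figures it comes out at roughly 1.4ms, with the first notch nearer 360Hz, a useful reminder that numbers like these are ballpark.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly room temperature (assumed)
FOOT = 0.3048           # metres

def reflection_delay(source_to_mic_m, height_m):
    """Extra travel time (s) of a floor reflection when source and mic are both height_m above the floor."""
    direct = source_to_mic_m
    # Mirror the source through the floor: the reflected path is a straight line from that image.
    reflected = math.hypot(source_to_mic_m, 2.0 * height_m)
    return (reflected - direct) / SPEED_OF_SOUND

delay = reflection_delay(0.5 * FOOT, 1.0 * FOOT)
first_notch = 1.0 / (2.0 * delay)
print(f"delay {delay * 1000:.2f} ms, notches near", [round(k * first_notch) for k in (1, 3, 5, 7)], "Hz")
```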

The complex waveform (Graph A) is made up of the simple component sine waves shown on graphs B1, B2, and B3.

But it doesn't work out exactly like this, for a number of reasons. For a start, the reflected sound will almost certainly have a slightly different timbre by virtue of the sound-absorption characteristics of the floor. Sonic reflections will also arrive at the mic capsule off-axis, which will alter their frequency balance. Then there's the contribution made by reflections from other nearby surfaces, which further complicate the frequency-response anomalies. However, even though you don't get a perfect comb-filtering effect in practice, reflections from the floor are still an important contributor to the sound of a close-miked cab, and many producers experiment with lifting and angling the cab in relation to the floor for this reason. If you want to hear this for yourself, surf over to my article on guitar recording at www.soundonsound.com/sos/aug07/articles/guitaramprecording.htm and have a listen to the audio files in the 'Room & Positioning' box, which demonstrate how much difference moving the cab relative to room boundaries can make.

Of course, phase cancellation between direct and reflected sound can cause problems when recording any instrument, and with acoustic instruments it becomes, if anything, more troublesome — listeners tend to have a less concrete expectation of how an electric guitar should sound, so phase cancellation can be used to shape the tone to taste, whereas with acoustic instruments the listener tends to have clearer expectations, so the tonal effects of comb-filtering are usually less acceptable. Fortunately, it's not too difficult to avoid problems like this, as long as you try to keep performers and microphones at least a few feet from room boundaries and other large reflective surfaces. This can be a bit trickier where space is limited, in which case it can also help to use soft furnishings or acoustic foam to intercept the worst of the room reflections. Our extensive DIY acoustics feature in SOS December 2007 (www.soundonsound.com/sos/dec07/articles/acoustics.htm) has lots of useful advice if you find yourself in this situation. Another thing to try in smaller rooms is boundary mics, because their design gets around the phase-cancellation problems associated with whichever surface they're mounted on.

Combining Mics & DIs

A number of instruments are routinely recorded acoustically and electrically at the same time. For example, electric bass is often recorded via both a DI box and a miked amp, while an acoustic guitar's piezo pickup system is sometimes recorded alongside the signal from a condenser mic placed in front of the instrument. In such cases, the waveform of the DI or pickup recording will precede the miked signal because of the time it takes for sound to travel from the cabinet speaker or instrument to the mic. The resultant phase cancellation can easily wreck the recording.

A quick fix for this is to invert the polarity of one of the signals, and see if this provides a more usable tone. It's almost as easy to tweak the miking distance for more options if neither polarity setting works out. A better method, though, is to re-align the two signals in some way by delaying the DI or pickup channel, either using an effect unit during recording, or by shifting one of the recorded tracks after the fact using your sequencer's audio-editing tools.
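
If you'd rather measure the offset than nudge tracks by eye or ear, cross-correlation is one common way to do it (we're not claiming it's what any particular sequencer uses): slide one track against the other and find the lag at which they match best. A strongly negative best match also suggests that one signal is polarity-inverted. This brute-force sketch works on short lists of samples; the function name, test data and search range are hypothetical.

```python
def best_alignment(reference, other, max_lag):
    """Find the lag (in samples) by which `other` trails `reference`, and whether it looks inverted.

    Returns (lag, inverted). Brute force, so only suitable for short excerpts.
    """
    best_lag, best_score = 0, 0.0
    for lag in range(0, max_lag + 1):
        # Compare reference[n] with other[n + lag] over the overlapping region.
        score = sum(r * o for r, o in zip(reference, other[lag:]))
        if abs(score) > abs(best_score):
            best_lag, best_score = lag, score
    return best_lag, best_score < 0

# Hypothetical data: the 'mic' track is the DI delayed by 5 samples, lower in level and polarity-flipped.
di = [0.0, 1.0, 0.5, -0.8, 0.3, -0.2, 0.9, -0.4, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
mic = [0.0] * 5 + [-0.6 * s for s in di[:-5]]
print(best_alignment(di, mic, max_lag=8))
```

Here the result indicates that the mic track trails the DI by five samples and is inverted, so you would delay the DI by five samples and flip its polarity. On real recordings you'd analyse a short excerpt, or use an FFT-based correlation for speed.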

Multi-miking Single Instruments

If you use more than one mic to record a single instrument, the simplest way to minimise the effects of phase cancellation is to get the mic capsules physically as close together as possible — what is often called coincident miking. However, given that it only takes about an 8mm difference between the capsule positions to produce a deep phase-cancellation notch at 20kHz, it pays to line up the mic capsules quite carefully — a process a lot of people refer to as 'matching the phase of the mics' or 'matching the mics for phase'. In practice, this is best done by ear, because it's often difficult to tell exactly where a mic's capsule is without taking it apart. One handy trick for doing this is to invert the polarity of one of the mics and then adjust the positions of the mics while the instrument plays, to achieve the lowest combined level. Once the polarity is returned to normal, the mic signals should combine with the minimum of phase cancellation.
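
The 8mm figure comes from the fact that the first notch appears where the path difference equals half a wavelength, so offset = c / (2f). A quick check, assuming c = 343m/s (the function names are our own):

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value

def offset_for_first_notch(freq_hz):
    """Capsule path difference (metres) that puts the first cancellation notch at freq_hz."""
    return SPEED_OF_SOUND / (2.0 * freq_hz)

def first_notch_for_offset(offset_m):
    """First cancellation frequency (Hz) for a given capsule path difference in metres."""
    return SPEED_OF_SOUND / (2.0 * offset_m)

print(f"{offset_for_first_notch(20000) * 1000:.1f} mm")  # about 8.6mm
print(f"{first_notch_for_offset(0.05):.0f} Hz for a 5cm capsule mismatch")
```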

Sine waves phase-cancel when delayed and undelayed versions of the same waveform in Graph A are mixed together. The red traces show the delayed versions of each waveform in graphs A, B1, B2 and B3. Graphs C1, C2 and C3 show the result of combining the different sine-wave components: the waves in Graph B1 only slightly phase-cancel, producing a combined sine wave of nearly twice the level of each of the individual sine waves; the waves in Graph B2 phase-cancel more heavily, producing a combined sine wave of only the same level as each of the individual sine waves; and the waves in Graph B3 are 180 degrees out of phase with each other, so completely phase-cancel.

Clearly, spaced mic positions that are equidistant from a sound source will also capture its direct sound without time delay, but some phase cancellation of reflected sounds will inevitably occur, so some adjustment of mic positions can prove useful here to find a suitable ambient timbre. However, it's worth bearing in mind that if you mic up any source of sound vibrations from in front and from behind simultaneously, the polarity of the signal from the rear mic may be inverted. This is very common when, for example, miking the front and back of an open-backed guitar cabinet or the top and bottom of a snare drum, and you'll usually find that the two mics will combine best (especially in terms of the bass response) if you compensate by using the polarity-inversion (often labelled 'phase') switch on one of the two channels while recording.

Despite the potential for phase cancellation, many producers nevertheless record instruments with two (or more) mics at different distances from the sound source. Where the two mics are comparatively close to each other, this provides some creative control over the sound, because tweaking the distance between them subtly shifts the frequencies at which the comb-filtering occurs. Inverting the polarity of one of the mics yields another whole set of timbres, switching the frequencies at which the sine-wave components in the two mic signals cancel and reinforce, so the potential for tonal adjustment via multi-miking is enormous.
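
Written out, for two equal-level signals with delay τ the normal sum has gain 2|cos(πfτ)| while the polarity-inverted sum has gain 2|sin(πfτ)|, so the notches and peaks swap places. This sketch (the function name is our own) evaluates both for the 0.5ms example used earlier in the article.

```python
import cmath
import math

def pair_gain(freq_hz, delay_s, invert=False):
    """Magnitude of 1 + s * e^(-j*2*pi*f*delay), where s = -1 if one mic is polarity-inverted."""
    sign = -1.0 if invert else 1.0
    return abs(1.0 + sign * cmath.exp(-2j * math.pi * freq_hz * delay_s))

for f in (100, 1000, 2000):
    normal = pair_gain(f, 0.0005)
    inverted = pair_gain(f, 0.0005, invert=True)
    print(f"{f:5d} Hz  normal {normal:.3f}  inverted {inverted:.3f}")
```

Note that the inverted pair also cancels progressively towards 0Hz, which is why getting the polarity right makes such a difference to the bass response.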

The severity of the comb filtering is usually reduced a little here, though, because two completely different models of mic are normally selected to increase the sonic options, and if those mics are at different distances or have different polar patterns they'll also pick up different levels of room sound; both factors make the two signals less similar. Using the mics at different levels in the mix also makes the comb filtering less audible.
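
To put rough numbers on the level effect: if one signal is at g times the level of the other, the comb's peaks reach 1 + g and its notches only fall to 1 - g, so the peak-to-notch depth is 20·log10((1 + g)/(1 - g)) dB. The sketch treats the two signals as identical apart from level and delay, which real mics never are, so read its results as a worst case. The function name is our own.

```python
import math

def comb_depth_db(level_ratio):
    """Peak-to-notch depth (dB) of the comb formed by two otherwise identical signals at this level ratio."""
    if level_ratio <= 0:
        raise ValueError("level_ratio must be positive")
    g = min(level_ratio, 1.0 / level_ratio)  # only the ratio between the two signals matters
    if g == 1.0:
        return math.inf  # equal levels: notches are infinitely deep in theory
    return 20.0 * math.log10((1.0 + g) / (1.0 - g))

for g in (0.9, 0.5, 0.25):
    print(f"second signal {20 * math.log10(g):.1f} dB down -> comb depth {comb_depth_db(g):.1f} dB")
```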

Creative use of comb filtering by multi-miking tends to work better with electric instruments, again, rather than with more 'natural' sounds. It's also easier to get a consistent tone with this technique on most electric instruments, because the cab remains stationary relative to the two mics, whereas many players of acoustic instruments move around while performing, changing the relative distance between the instrument and the different mics in the process. Miking an acoustic guitar with one mic at the soundhole and one mic at the fretboard, for example, can make life difficult if the guitarist rotates the instrument at all while playing (and most players do), because this will change the relative distances between the instrument and the two mics, hence the delay between the mics, and hence the frequencies at which comb filtering occurs. Recordings like this can prove a nightmare to mix, because no EQ in the world can compensate for this kind of constantly shifting tonal balance.

Phase cancellation is still an issue to consider when using a single mic. This diagram shows how the sound from a guitar amp is captured both directly and reflected off the floor (and other surfaces), which will cause some phase cancellation (though whether that is a good or a bad thing for the sound will be a question of taste!).

Multi-miking tends to work better with acoustic instruments where one of the mics is significantly further away from the instrument than the other, specifically so that it captures more room ambience. Comb filtering is reduced here because the complex combination of different reflected sounds in the room gives the ambient mic's signal a very different recorded waveform from the close mic's, and it is also common for the ambient mic to be at a lower level. Sometimes producers with a large room to hand take the second mic 20-30 feet away from the sound source, thereby increasing the delay between the mics to such an extent that the ambient mic's signal begins to be perceivable separately from the close mic's signal, sounding like an added set of early reflections rather than merging with the sound of the close mic and causing a timbral change.

If, like most owners of small studios, you don't have the luxury of a huge live room to play with, you can try taking a leaf out of producer Steve Albini's book by artificially delaying the ambient mic instead: "I'll sometimes delay the ambient microphones by a few milliseconds," he commented back in SOS September 2005, "and that has the effect of getting rid of some of the slight phasing that you hear when you have microphones at a distance and up close... When you move them far enough away they start sounding like acoustic reflections, which is what they are."

On the other hand, you could delay the closer mic's signal to line up its audio waveform with that of the more distant mic, a technique that I find most useful where the distant mic is more important to the composite sound. And there's nothing stopping you from trying other, intermediate delay times to exploit comb filtering for artistic ends: the tonal effect is much more complex than can easily be achieved with EQ.
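
Either way, the delay you need is the difference in distances divided by the speed of sound. This sketch converts that into samples for nudging a track in a DAW; the mic distances, the 48kHz sample rate and the 343m/s speed of sound are hypothetical figures, and in practice you'd fine-tune by ear or by lining up the waveforms.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed

def alignment_delay_samples(close_m, far_m, sample_rate=48000):
    """Samples by which to delay the close mic so its waveform lines up with the far mic.

    A negative result would mean the 'close' mic is actually the further one.
    """
    seconds = (far_m - close_m) / SPEED_OF_SOUND
    return round(seconds * sample_rate)

# Hypothetical positions: close mic 0.3m from the source, room mic 6m away (roughly 20 feet).
print(alignment_delay_samples(0.3, 6.0), "samples")
```

By the same arithmetic, a 20-30 foot spacing works out at very roughly 18-27ms, which is why such distant mics start to register as separate reflections.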

Phase Cancellation: The Acid Test Of Transparency

There is one situation where phase cancellation can be a true friend in the studio, and that is where you want to check whether two audio files are exactly identical or not. Just line up the two files so that their waveforms are exactly in phase with each other, and then invert the polarity of one — if they're identical, you should get total phase-cancellation (in other words, complete silence) when combining them. This is an excellent way of checking whether a process that should be transparent (say, audio format conversion, or the bypass mode of a plug-in or hardware digital processor) actually is.
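
In a DAW this is simply two tracks with one polarity-inverted, but the same check is easy to script. This sketch assumes the two files have already been decoded to equal-length lists of float samples (full scale = 1.0) and lined up; subtracting one from the other is equivalent to inverting one and summing, and the function (a name of our own) reports the peak residual in dBFS.

```python
import math

def null_test_dbfs(a, b):
    """Peak level (dBFS) of a - b; -inf means the two sample sequences are identical."""
    if len(a) != len(b):
        raise ValueError("sequences must be the same length (align and trim them first)")
    peak = max((abs(x - y) for x, y in zip(a, b)), default=0.0)
    return -math.inf if peak == 0.0 else 20.0 * math.log10(peak)

original = [0.0, 0.5, -0.25, 0.125]
processed = [0.0, 0.5, -0.25, 0.125 + 2 ** -16]  # hypothetical tiny change from a 'transparent' process
print(null_test_dbfs(original, original))
print(round(null_test_dbfs(original, processed), 1))
```

Even a one-sample offset will stop the files nulling, so it pays to line them up carefully before drawing conclusions.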

Stereo Mic Techniques & Mono Compatibility

When it comes to miking up more than one instrument in the same room — anything from a small drum kit to Holst's The Planets — there are two main approaches. Either you can set up a single stereo mic rig to capture the whole ensemble as they sound in the room, or you can put individual mics up in front of each musician or small group of musicians. Phase considerations are often dealt with differently in each of these situations, so I'll look at them in turn.

Stereo recordings can be captured with a mic pair in a couple of different ways: the first is to use coincident directional microphones, so that off-centre instruments create level differences between the mics; and the second is to use spaced mics, so that the sound from off-centre instruments arrives at the mics at different times. Both level differences and time differences between the left and right channels can create the illusion of stereo positioning when listening back to a recording over loudspeakers or headphones and, depending on the circumstances, both approaches (or indeed a combination of the two) can be valid.

Phase cancellation isn't necessarily a bad thing. The 'phase EQ' technique uses three mics positioned to form a triangle. The faders on the desk (or in the DAW) can then be raised or lowered for each signal, altering the balance between the signals and therefore how strongly they phase-cancel, and this can be a less intrusive alternative to conventional EQ.

By now it should be clear that any spaced-mics recording method has the capacity to produce comb filtering if its two mic signals are combined. However, when you pan the two mics of a spaced stereo pair to separate loudspeakers, your ears don't simply add the two signals together to create comb filtering; instead, they process them separately, using any time-delay information to judge the positions of different ensemble instruments in the stereo field.

Problems arise with this, though, if the mono compatibility of your recordings is important (as it is for many broadcast purposes), because turning stereo into mono involves directly combining the two mic signals — at which point comb filtering can wreak havoc with the tonality, despite the stereo recording sounding fine. Even if you're unconcerned about mono compatibility in principle, you could still come unstuck if you find that you want to narrow the stereo image of a spaced-pair recording at the mix — because panning the mic signals anything other than hard left and right will combine them to some extent, giving you a tonal change alongside the image width adjustment.
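
For a rough idea of where the mono-sum notches land, the far-field approximation says the extra path to the further mic is the mic spacing times the sine of the source's angle from straight ahead. The 0.6m spacing is a hypothetical example, the sketch assumes 343m/s, and sources close to the pair (as well as room reflections) will shift the real figures.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed

def mono_sum_first_notch(spacing_m, source_angle_deg):
    """First comb-filter notch (Hz) when a spaced pair is summed to mono (far-field approximation).

    Returns None for a centred source, which reaches both mics at the same time.
    """
    delay = spacing_m * math.sin(math.radians(source_angle_deg)) / SPEED_OF_SOUND
    if abs(delay) < 1e-12:
        return None
    return 1.0 / (2.0 * abs(delay))

for angle in (0, 15, 45, 90):
    notch = mono_sum_first_notch(0.6, angle)
    label = "no delay" if notch is None else f"first notch near {notch:.0f} Hz"
    print(f"{angle:2d} degrees off-centre: {label}")
```

The further off-centre the source, the lower the first notch, while a centred source produces no delay at all.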

Where mono compatibility is paramount, coincident stereo techniques are clearly the most suitable choice. However, a lot of engineers find that the lack of any time-difference information in such recordings makes them sound rather clinical and emotionally uninvolving, so they have come up with a number of ways of maximising the mono compatibility of spaced-pair recordings instead.

Although it might appear to be a good idea to reverse the polarity of one of the mics to achieve a better mono sound, this creates a very weird effect on the stereo recording, so this is of little use here. The simplest thing you can do, therefore, is first set up the spaced stereo pair to your satisfaction, and then quickly switch to mono monitoring and subtly adjust the distance between the mics to massage the tonal balance of the mono sound. Small mic movements will make quite large differences to the mono mix, but without making a huge difference to the stereo sound. The goal is to find mic positions that keep the tonality as consistent as possible as you flip between mono and stereo monitoring.

Another trick is to position the spaced mics equidistant from the most important elements of the ensemble (putting them exactly in the middle of the stereo picture), ensuring that they transfer to mono as well as possible. This principle is used by some engineers when setting up drum overhead mics, and is particularly associated with über-producer Glyn Johns — the idea being to place the mics equidistant from the snare drum, kick drum, or both.

The famous Decca Tree technique is an example of a different tactic: spacing the mic pair more widely and then setting up another mic between them, panned centrally. (For more on this, have a look at the second part of Hugh Robjohns' stereo mic techniques article in SOS March 1997, at www.soundonsound.com/sos/1997_articles/mar97/stereomictechs2.html.) Because the central mic carries the majority of the recording and the left and right mics provide the stereo effect, the sound from the central mic remains unchanged when you sum to mono (it was already mono!), and the phase cancellation between the spaced mics makes less of an impression because they're lower in level. The downside of such techniques, though, is that the phase cancellation between the central mic and each of the other mics can make finding suitable positions for the stereo rig a bit trickier. There's no such thing as a free lunch!

A final worthwhile option is to try one of the techniques that combine time- and level-difference information by using spaced directional mics, such as the ORTF or NOS standards. Although the sound of off-centre instruments will still arrive at the two mics at different times, the levels of the two signals will be differentiated by the mic polar patterns, which will help reduce the audibility of the comb filtering. However, that's no excuse for skipping a mono check while recording.
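
To see how angled directional mics add a level difference, the sketch below assumes ideal cardioid patterns and a distant source. It computes the level difference between the two mics for a source at a given angle off the pair's centre line. ORTF angles its cardioids 110° apart (±55°) at 17cm spacing, and NOS uses 90° (±45°) at 30cm spacing. Spacing only affects timing, so it doesn't enter this level calculation. The function names are hypothetical.

```python
import math


def cardioid_gain(off_axis_deg):
    """Ideal cardioid sensitivity (linear, 1.0 on axis) at off_axis_deg."""
    return 0.5 * (1 + math.cos(math.radians(off_axis_deg)))


def pair_level_difference_db(source_deg, half_angle_deg):
    """Left-minus-right level (dB) for an angled cardioid pair.

    source_deg is the source angle from the pair's centre line (positive =
    towards the left mic); each mic points half_angle_deg off centre.
    Ideal patterns and a distant source are assumed.
    """
    left = cardioid_gain(source_deg - half_angle_deg)
    right = cardioid_gain(source_deg + half_angle_deg)
    if left == 0.0 or right == 0.0:
        # Source sits exactly in one mic's rear null
        return math.inf if right == 0.0 else -math.inf
    return 20 * math.log10(left / right)


# ORTF: cardioids 110 degrees apart; NOS: 90 degrees apart
for name, half_angle in (("ORTF", 55), ("NOS", 45)):
    print(name, round(pair_level_difference_db(30, half_angle), 1), "dB at 30 degrees")
```

Under these idealised assumptions, a source 30° off-centre comes out nearly 5dB louder in the nearer ORTF mic. That level offset is what takes the edge off the comb filtering.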

Phase Cancellation When Layering

Throughout the main body of this article I've concentrated mostly on the phase-cancellation problems that occur when two versions of pretty much the same sound are mixed with a delay between them. However, phase cancellation can also occur to some extent between the sine-wave components of any two similar sounds that are layered together. When layering human performances on real instruments, this is not a problem; on the contrary, when you're layering up vocal or guitar overdubs, the fluctuating phase cancellations between the different, naturally varying tracks are all part of the appeal. However, if you try to layer sampled or synthesized sounds together within a programmed track, you can encounter all sorts of pitfalls.

The first common problem occurs when you're feeding the same MIDI notes to two different sample-based instruments, either hardware or software. The sounds you select for the two different parts will play much more accurately together than would human performers, and the notes will be more uniform in tone than those of real instruments. Even if there is ostensibly no delay between the onset of the two different sounds, the phase relationships of their different sine-wave components may still be very different, and the resultant phase-cancellations won't vary in a natural way, as they would with layered live performances. This often gives a kind of 'hollowness' to the layered patch, which you'll rarely find appealing.
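
The 'hollowness' comes from individual sine-wave components meeting at fixed phase offsets, even with no delay. Two equal components at the same frequency sum to an amplitude anywhere between 0 and 2, depending on their phase offset. The sketch uses phasor addition, and the per-partial offsets in the usage lines are invented purely for illustration.

```python
import math


def summed_amplitude(phase_offset_deg, a=1.0, b=1.0):
    """Amplitude of a*sin(wt) + b*sin(wt + phi) for a phase offset phi in degrees.

    Uses phasor addition: |a + b*e^(j*phi)|.
    """
    phi = math.radians(phase_offset_deg)
    power = a * a + b * b + 2 * a * b * math.cos(phi)
    return math.sqrt(max(power, 0.0))  # guard against tiny negative rounding error


# Hypothetical phase offsets between matching partials of two layered samples
for harmonic, offset in [(1, 20), (2, 150), (3, 175), (4, 60)]:
    level = 20 * math.log10(summed_amplitude(offset) / 2)
    print(f"harmonic {harmonic}: {level:+.1f}dB relative to perfect addition")
```

Because these offsets never change from note to note, the uneven spectrum they produce is baked into every note of the layered patch.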

However, I've found that it's not too tricky to avoid unpleasant combinations in these situations as long as you steer clear of pairs of sounds which are both percussive and similar-sounding (say two different pianos) — and with things like evolving pads you can get away with all sorts of combined sounds without difficulties. You should also take care when layering sounds with prominent low frequencies (such as basses and kick drums), because it can really suck the power out of the track if the combination cancels out even a single powerful low-frequency sine-wave component. (In fact, this is as much a concern with live instruments, and accounts for the comparative rarity of layered bass sounds on record.)

The problem can be compounded if you also get a delay between the two instrument layers. You might ask 'How can this occur if I'm sending each one the same MIDI data?' The most common way it can happen is if you're running hardware sound-modules alongside a computer sequencer. For a start, the computer's internal instruments don't have to deal with the output MIDI latency of your soundcard, but there's also the fact that hardware sound modules suffer from latency too, and each one will probably have a different latency value. If you're then monitoring your hardware sound modules' outputs through spare inputs on your audio interface, you'll have to contend with further latency from the soundcard.

The biggest difficulties arise, though, when you're layering bass or kick-drum sounds and there's a delay between the two layers that varies from moment to moment. The result is a sound that is practically unmixable: the timbre of the instrument varies from note to note, and some notes completely lose their power through cancellation of important low frequencies. You'd have thought that this would be a fairly uncommon problem, but I've encountered it twice in recent Mix Rescue projects, which leads me to suspect that it might actually be quite widespread. One workaround is to use only one of the layers to supply the low frequencies, by high-pass filtering the other, but in my experience this tends to be more successful with bass sounds than with kick drums. For the latter, it makes much more sense to layer the two kick samples within a single sampler instrument (hardware or software), as this is more likely to ensure that they always trigger at exactly the same time, thereby keeping phase cancellation between the sounds, and their composite timbre, consistent.
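
Here's why a wandering delay makes layered kick and bass sounds so unpredictable. For two equal layers with one delayed by τ, a component at frequency f is scaled by |2cos(πfτ)|. A few milliseconds of drift can therefore swing a low-frequency fundamental from a 6dB boost to near silence. The 50Hz fundamental and the per-note delays below are hypothetical.

```python
import math


def layer_gain_db(freq_hz, delay_ms):
    """Gain (dB, relative to one layer alone) of a frequency component when two
    equal-level layers are summed with one of them delayed by delay_ms."""
    phase = 2 * math.pi * freq_hz * delay_ms / 1000.0
    amplitude = abs(2 * math.cos(phase / 2))  # magnitude of 1 + e^(-j*phase)
    if amplitude < 1e-12:
        return float("-inf")  # complete cancellation
    return 20 * math.log10(amplitude)


# Hypothetical: a 50Hz kick fundamental, with the layer delay drifting note to note
for note, delay in enumerate([1.0, 4.0, 7.5, 10.0], start=1):
    print(f"note {note}: {layer_gain_db(50, delay):+.1f}dB at 50Hz")
```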

Multi-miking Ensembles

Where phase cancellation can really mess things up is when you start miking individual instruments in an ensemble separately. You'd ideally like each instrument mic to pick up just the instrument it is pointing at, but in reality it will pick up spill from all of the instruments around it. The sound of each instrument through its own mic will, to some extent, phase-cancel with its spill on every other mic, so that moving any single mic has the potential to change the sound of the other instruments in an incredibly complex way.

There are some engineers who (by dint of golden ears, years of experience, and a pact with a certain horned gentleman) have acquired the skill of managing this mass of phase cancellations such that they can actively use spill to enhance and 'glue together' large-scale multi-mic recordings. Such luminaries are often happy to use primarily omni mics, despite the increased levels of spill, for this reason. For the rest of us mere mortals, the key to success on this kind of recording session is reducing the levels of spill as much as is feasibly possible, thereby minimising the audible effects of the comb filtering.

Phase can be a problem with stereo miking of sources that aren't stationary. An acoustic guitar player, for example, will usually move the guitar at least a little during performance, which is why closely placed coincident pairs tend to be preferred over spaced stereo techniques.

There's a lot that can be done in this regard simply by careful positioning of the mics and ensemble instruments, and in general it makes sense to keep each mic closer to the instrument it is covering than to sources of spill. This idea is often encapsulated as the '3:1 rule': in order to keep spill manageably low, the distance between mics on different instruments should be at least three times the distance between each mic and the instrument it is supposed to be covering.
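
A common justification for the 3:1 figure is the inverse-square law. In a free field, level falls by 20·log10(d2/d1) dB as distance grows from d1 to d2, so spill travelling roughly three times as far arrives about 9.5dB down. This sketch assumes free-field behaviour and a point source. Those are exactly the assumptions that real rooms, instrument volumes and polar patterns upset.

```python
import math


def distance_attenuation_db(near_m, far_m):
    """Level drop (dB) from near_m to far_m away from a point source,
    assuming free-field inverse-square behaviour (no room reflections)."""
    return 20 * math.log10(far_m / near_m)


# Hypothetical: close mic 30cm from its instrument, spill path three times longer
print(round(distance_attenuation_db(0.3, 0.9), 1))
```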

Although the 3:1 rule is a handy guide for some engineers, I don't personally find it to be very useful, because it doesn't take into account the differences in volume between different instruments, any acoustic factors in the room, or the effects of microphone polar patterns. Indeed, judicious baffling of instruments and some attention to room treatment are just as important for managing spill, in my experience, as mic selection and positioning. A more sensible route, in my opinion, is to work in terms of the ratio between the level of the close mic for each instrument and the total spill level picked up for that instrument by all the other mics. You can easily test this by asking each miked musician or group of musicians to play in turn with all the mics open — if muting that instrument's close mic reduces the overall mix level by around 9dB you should be pretty well in the clear.
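
One way to relate the mute test to the close-mic-to-spill ratio is to treat the close mic and the combined spill as adding in power. That assumes uncorrelated signals, which is a simplification because real spill is correlated and comb-filters, so the result is only a rough guide. Under this assumption, a 9dB drop implies the close mic sits roughly 8.4dB above the total spill. The helper name is hypothetical.

```python
import math


def close_to_spill_ratio_db(mute_drop_db):
    """Rough close-mic to total-spill ratio (dB) from the level drop observed
    when that instrument's close mic is muted.

    Assumes the close mic and the spill add in power (uncorrelated signals);
    real spill is correlated and comb-filters, so this is only a guide.
    """
    total_over_spill = 10 ** (mute_drop_db / 10.0)  # power ratio (close + spill) / spill
    if total_over_spill <= 1.0:
        raise ValueError("muting the close mic must reduce the overall level")
    return 10 * math.log10(total_over_spill - 1.0)


for drop in (3, 6, 9, 12):
    print(f"{drop}dB drop -> close mic about {close_to_spill_ratio_db(drop):.1f}dB above spill")
```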

Clearly, directional mics can make the task of reducing spill levels easier, because rejection nulls can be aimed towards neighbouring instruments; the deep side nulls of figure-of-eight mics can really come into their own here. However, all directional mics (especially less expensive models) colour off-axis sound to some extent, which on occasion causes more problems than the directivity solves, because a lesser amount of nasty-sounding spill can prove more difficult to deal with (even though it causes less phase cancellation) than a greater amount of comparatively uncoloured spill. This principle is at the heart of a useful technique (described by Mike Stavrou in his fascinating book Mixing With Your Mind) that involves miking up each instrument to get it sounding the best you can on its own, and then repositioning that instrument and its mic together (without changing their relative positions) to achieve a balanced tonality for any spill.
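
For a rough feel of how much rejection each pattern offers, ideal first-order polar patterns can be written as A + B·cos θ, with A + B = 1. The coefficients below are textbook ideals, with hypercardioid taken as 0.25/0.75. Real mics deviate from them and colour off-axis sound, which is precisely the caveat above. The function name is hypothetical.

```python
import math

# Ideal first-order patterns written as A + B*cos(theta), with A + B = 1
PATTERNS = {
    "omni": (1.0, 0.0),
    "cardioid": (0.5, 0.5),
    "hypercardioid": (0.25, 0.75),
    "figure-of-eight": (0.0, 1.0),
}


def rejection_db(pattern, angle_deg):
    """How far (dB) an ideal pattern attenuates sound arriving angle_deg off axis."""
    a, b = PATTERNS[pattern]
    gain = abs(a + b * math.cos(math.radians(angle_deg)))
    if gain < 1e-12:
        return math.inf  # source sits in a null
    return 20 * math.log10(1.0 / gain)


for name in PATTERNS:
    print(f"{name}: {rejection_db(name, 90):.1f}dB at 90, {rejection_db(name, 180):.1f}dB at 180")
```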

When miking a snare from above and below, the two signals will be largely opposite in polarity, so use the polarity-invert switch on your desk (or in your DAW) on one of them.

Pretty much whatever you do, though, you'll still get spill of one kind or another on most of the mics. This means that even if you get each close mic sounding fine for the instrument it's nominally assigned to, it's likely that some of these lovingly finessed mic signals will suffer from adverse phase cancellation when all the mics are mixed together. In this kind of situation, the first thing to try is reversing the polarity of various different combinations of mics, perhaps starting with the most compromised-sounding close mics. There are no 'correct' polarity settings here, so look for the combination that offers the best tonal balance across all the instruments of the ensemble. It's vital to remember, though, that inverting the polarity of any single mic will change more than just the sound of the instrument it's pointing at, so keep a wary ear out for tonal changes to any instrument, especially those in close proximity to the mic in question.
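
When auditioning polarity combinations, it helps to know how many there really are. Flipping every mic at once inverts the whole mix but leaves every relative phase relationship intact, so one mic can stay fixed, leaving 2^(n-1) combinations for n mics. The helper and the mic names below are hypothetical.

```python
from itertools import product


def distinct_polarity_settings(mic_names):
    """Polarity-switch combinations worth auditioning for a list of mics.

    Inverting every mic at once flips the whole mix but leaves all the
    relative phase relationships (and so the tonality) unchanged, so the
    first mic is held at normal polarity: 2**(n-1) settings instead of 2**n.
    """
    if not mic_names:
        return []
    first, *rest = mic_names
    settings = []
    for flips in product((False, True), repeat=len(rest)):
        setting = {first: False}          # reference mic never flipped
        setting.update(zip(rest, flips))  # True means polarity inverted
        settings.append(setting)
    return settings


# Hypothetical mic list for a small kit session
mics = ["kick", "snare top", "snare bottom", "overheads"]
for setting in distinct_polarity_settings(mics):
    inverted = [name for name, flipped in setting.items() if flipped]
    print(inverted or "all normal")
```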

As long as you're getting a good sound on each close mic, and have been careful with the timbre and level of any spill, you should be able to find a combination of polarity switches that will give you a good ensemble sound. If the polarity switches don't do the job for a particular instrument, you probably need to work harder at adjusting the quality of that instrument's spill on some of the other mics. Quickly muting a few likely contenders can help isolate which of them are contributing the most problematic spill.

A carefully multi-miked ensemble recording can allow for a great deal of mixing control over the instrumental balance, but it should be pretty obvious by now that setting everything up can be complicated and time-consuming. However, if the ensemble is already pretty well internally balanced as it is, you can make things a bit easier for yourself by trying to capture that balance via a main stereo mic rig, and then using close mics only to support and adjust the balance as required. This allows you to reduce the mix contribution from each of the close mics, or even fade them up only one or two at a time when needed, and makes the exact nature of the comb filtering between them slightly less critical to the overall sound. The phase cancellation between the close mics and the main stereo pair then becomes the predominant focus of attention.

Set Phase To Stunning

For many home studio owners, the word 'phase' in relation to mic technique carries with it a certain whiff of mystery; equal parts magic and menace. Although phase is indeed capable of transforming duffers into diamonds and vice versa, I hope I've been able to demonstrate that the issue is comparatively straightforward to understand, and that dealing with it properly will help you get better results every time you put up a mic to record.

Variable Phase Devices

The comb filtering that occurs due to phase cancellation can often be a desirable effect, and while a simple delay can be used to 'correct' time-of-arrival differences between (for example) signals from two different microphones recording the same source, it doesn't give you control over which frequencies are being aligned.
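
To see why a plain delay can't choose which frequencies align, compare the two quantities below. The first is the delay needed to line up two mics at different distances, assuming a speed of sound of 343m/s and a 48kHz sample rate. The second is the phase shift a given delay imposes, which rises in proportion to frequency. Both function names are hypothetical.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def alignment_delay_samples(distance_difference_m, sample_rate_hz=48000, c=SPEED_OF_SOUND):
    """Whole-sample delay to add to the nearer mic so it lines up with a mic
    distance_difference_m further from the source."""
    return round(distance_difference_m / c * sample_rate_hz)


def phase_shift_deg(delay_s, freq_hz):
    """Phase shift (degrees) that a pure delay imposes at freq_hz. It grows in
    proportion to frequency, so a delay moves every frequency together rather
    than letting you choose which ones line up."""
    return 360.0 * freq_hz * delay_s


print(alignment_delay_samples(0.343))  # an extra 34.3cm at 48kHz
for f in (125, 250, 500, 1000):
    print(f, "Hz:", round(phase_shift_deg(0.001, f), 1), "degrees for a 1ms delay")
```

A 1ms offset is 90° at 250Hz but a full 360° at 1kHz, so a delay alone can't pick out one frequency region to bring into line.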

Some devices, including the Neve Portico 5016, the Radial Phazer and Audient Mico, and software like Tritone Digital's Phase Tone, allow you to decide which frequencies add constructively to your sound and which phase-cancel.

Hardware units such as the Neve Portico 5016, the Little Labs IBP, the Audient Mico and Radial's Phazer all enable you to make variable phase adjustments. They use a phase-shifting circuit, which enables the user basically to decide which frequencies add constructively and which still cancel — allowing the subjective 'focus' of a sound to be optimised, or the sound quality from the combination of DI and miked signals to be tweaked in a satisfying way. Similar tonal shaping options are offered by software such as Tritone Digital's Phase Tone, and can be used to tweak the sound during the mixing stage — though, of course, it is better to get the sound right during recording.
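
The manufacturers' circuits aren't described here, but a common building block for this kind of variable phase adjustment is the all-pass filter. It passes every frequency at equal level while rotating phase by a frequency-dependent amount. The sketch below is a generic digital first-order all-pass, not a model of any of these units. Moving its -90° point `fc` changes which frequencies add up or cancel once the filtered signal is summed with the other one.

```python
import cmath
import math


def allpass_coefficient(fc_hz, sample_rate_hz=48000):
    """Coefficient a that puts the -90 degree point of the all-pass at fc_hz."""
    t = math.tan(math.pi * fc_hz / sample_rate_hz)
    return (t - 1) / (t + 1)


def allpass_response(a, freq_hz, sample_rate_hz=48000):
    """Magnitude and phase (degrees) of H(z) = (a + z^-1) / (1 + a*z^-1) at freq_hz."""
    w = 2 * math.pi * freq_hz / sample_rate_hz
    z_inv = cmath.exp(-1j * w)
    h = (a + z_inv) / (1 + a * z_inv)
    return abs(h), math.degrees(cmath.phase(h))


# Sweeping the (hypothetical) 90-degree point: level stays flat, phase moves
for fc in (100, 400, 1600):
    a = allpass_coefficient(fc)
    mag, phase = allpass_response(a, 400)
    print(f"fc={fc}Hz: at 400Hz gain {mag:.3f}, phase {phase:.1f} degrees")
```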
